Cosmic Structure & Modified Gravity

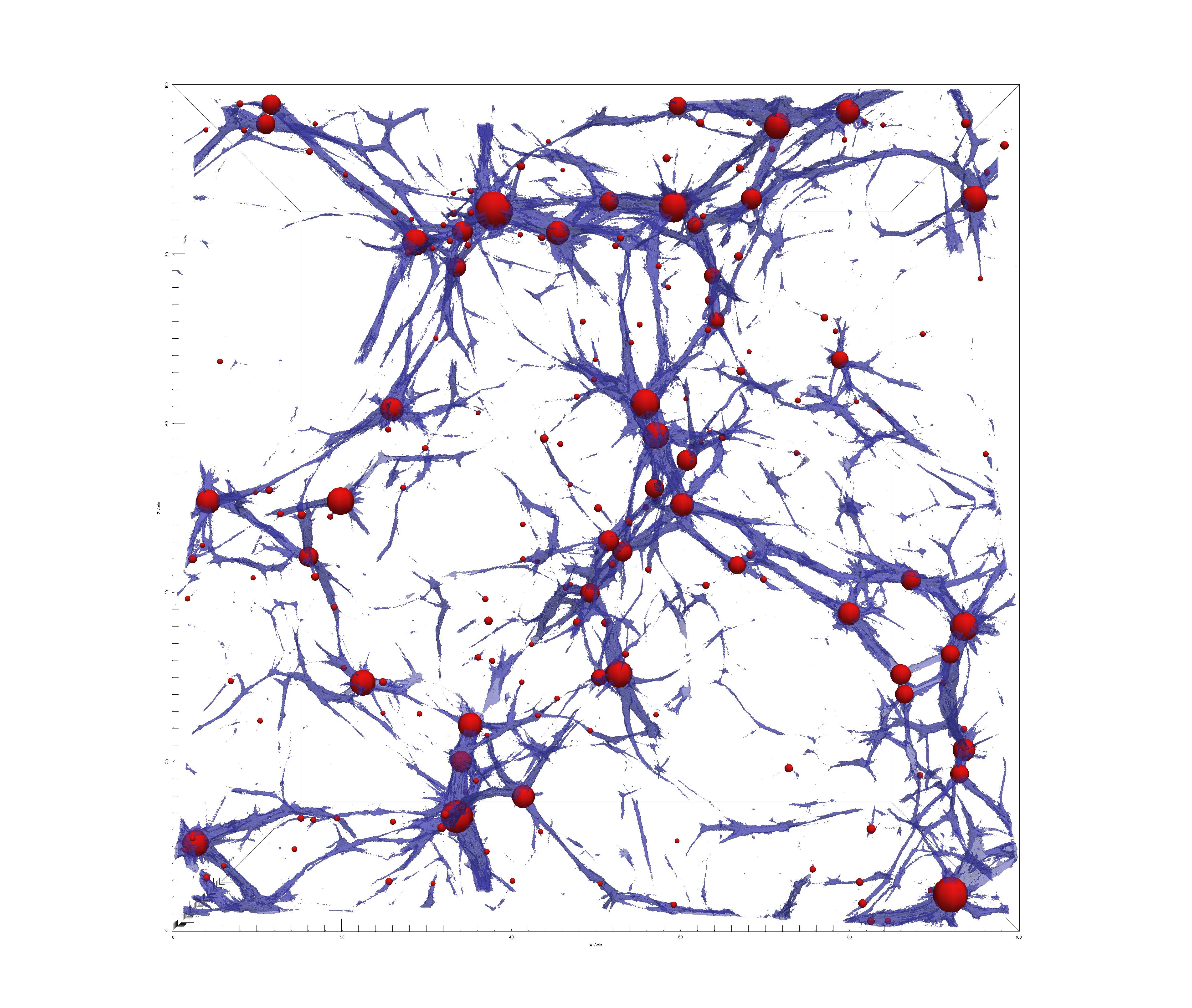

The large-scale structure of the Universe, often referred to as the Cosmic Web, is a complex network of dark matter haloes, filaments, and sheets surrounding vast cosmic voids. Understanding the formation and evolution of this structure is a cornerstone of modern cosmology. Dark matter, comprising approximately 27% of the Universe’s mass-energy budget, plays a dominant role in gravitational collapse and structure formation, providing the scaffolding upon which galaxies and galaxy clusters form. Detailed characterization of the dark matter distribution, from its nearly smooth initial state to its highly non-linear final configuration, is essential for testing the standard cosmological model and probing the fundamental properties of dark matter.

The standard Lambda-CDM model has been remarkably successful in explaining a wide range of cosmological observations. However, mysteries such as the nature of dark energy, responsible for the Universe’s accelerated expansion, prompt investigations into alternative gravitational theories, known as Modified Gravity. These theories propose deviations from General Relativity on cosmic scales, potentially altering the growth of structure and leaving distinct signatures in the Cosmic Web. Precisely modeling these effects, alongside the complex, multi-stream dynamics of dark matter, presents significant theoretical and computational challenges, requiring sophisticated numerical simulations and analytical tools.

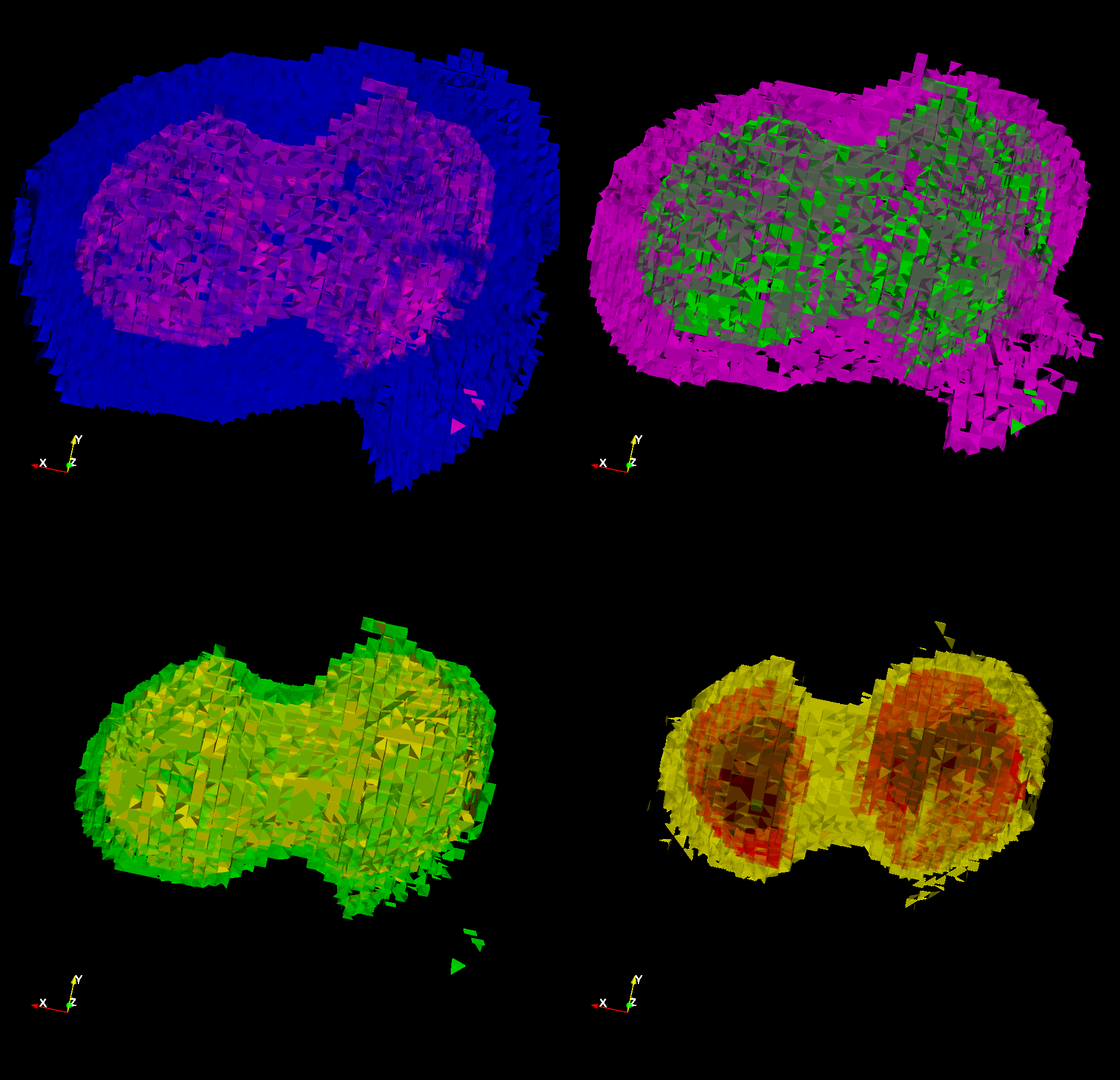

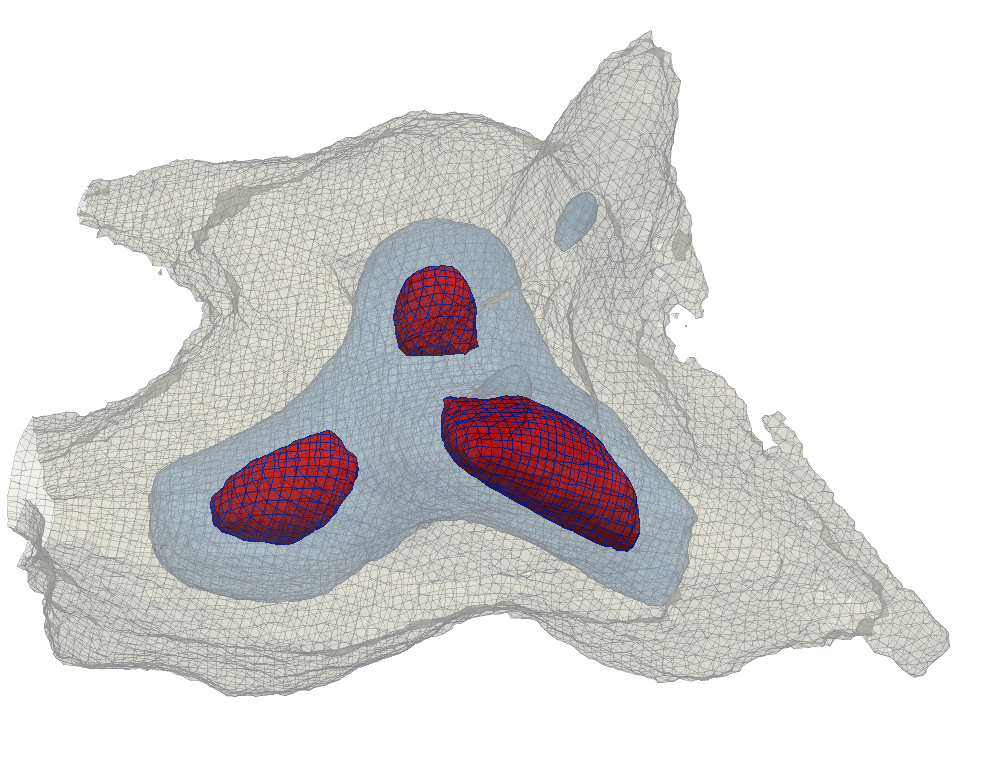

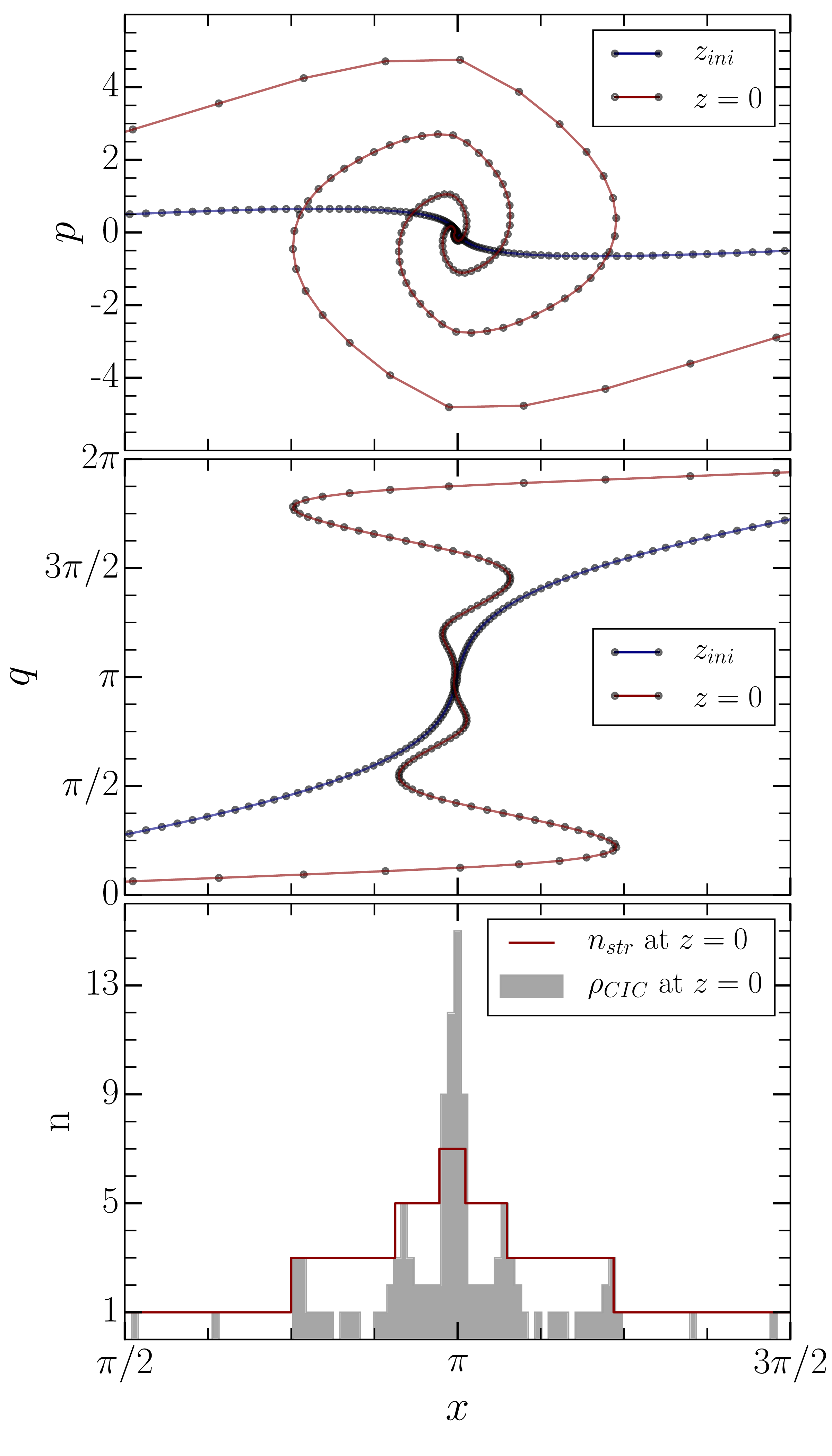

My research explores the multi-stream nature of the dark matter web, moving beyond simplified single-fluid descriptions. I have specifically investigated the “Caustic Design of the Dark Matter Web,” tracing particle trajectories to understand how the interweaving of multiple dark matter streams gives rise to the characteristic features of haloes and filaments. This work, detailed in papers such as “Dark matter haloes: a multistream view” and “Topology and geometry of the dark matter web: a multistream view,” characterizes the density, velocity, and velocity dispersion fields within these environments. It also reveals the formation of caustics, the surfaces where the dark matter sheet folds over itself, the number of streams changes, and the density is sharply enhanced. This “Multi-stream portrait of the Cosmic web” provides a more complete and physically realistic picture of dark matter structures, which is critical for interpreting observational probes such as gravitational lensing.
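To make the idea of stream counting concrete, here is a minimal one-dimensional sketch. It is not the method used in the papers above: it assumes a toy Zel'dovich-like map x(q) = q - D sin(q) with a made-up sinusoidal displacement, and the function names `zeldovich_1d` and `count_streams` are hypothetical. The number of streams at an Eulerian position x is simply the number of Lagrangian positions q that land there, and caustics sit where that number jumps.

```python
import numpy as np

def zeldovich_1d(q, growth):
    """Toy 1D map from Lagrangian q to Eulerian x: x = q - D * sin(q).

    The sinusoidal displacement is an illustrative assumption; shell
    crossing first occurs at q = 0 once the growth factor D exceeds 1.
    """
    return q - growth * np.sin(q)

def count_streams(x, x_eval):
    """Count streams at each point of x_eval.

    x holds the Eulerian positions of particles ordered by their Lagrangian
    coordinate. Every segment of the piecewise-linear map x(q) that covers
    an evaluation point contributes one stream.
    """
    lo = np.minimum(x[:-1], x[1:])
    hi = np.maximum(x[:-1], x[1:])
    # Half-open intervals avoid double counting at shared segment endpoints.
    return np.array([np.sum((lo <= xe) & (xe < hi)) for xe in x_eval])

q = np.linspace(-np.pi, np.pi, 2001)      # Lagrangian grid
x_eval = np.array([0.1, 2.0])             # one point near the centre, one outside

before = count_streams(zeldovich_1d(q, growth=0.5), x_eval)  # before shell crossing
after = count_streams(zeldovich_1d(q, growth=2.0), x_eval)   # after shell crossing
print("streams before shell crossing:", before)
print("streams after shell crossing: ", after)
```

Before shell crossing every point is single-stream; afterwards the central region becomes three-stream while the outskirts remain single-stream. The boundaries of the three-stream region, where dx/dq = 0, are the caustics of this toy model.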

My research also extends to Modified Gravity, with a focus on f(R) theories. Exploring the parameter space of these alternative gravitational models with full simulations is computationally expensive, so I developed a “Matter Power Spectrum Emulator for f(R) Modified Gravity Cosmologies.” This emulator quickly and accurately predicts the matter power spectrum, a key statistical measure of cosmic structure, across a wide range of cosmological and f(R) parameters. It makes it practical to compare theoretical predictions from modified gravity models with observational data from large-scale structure surveys, allowing for robust constraints on deviations from General Relativity and refining our understanding of cosmic acceleration. The ultimate aim of this work is to provide rigorous tests of fundamental physics through the detailed study of the Cosmic Web.
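As a rough illustration of how an emulator of this kind can work, the sketch below compresses a set of training spectra with principal component analysis and fits the component weights as a smooth function of the input parameters. Everything in it is an assumption made for illustration: `toy_boost` is an analytic stand-in for simulation outputs, the two parameters in `theta` are abstract rescaled quantities rather than real cosmological or f(R) parameters, and quadratic least squares is used where a more flexible interpolator (for example a Gaussian process) would normally be chosen. It is not the published emulator.

```python
import numpy as np

def toy_boost(k, theta):
    """Toy stand-in for a simulated ratio P_fR(k) / P_LCDM(k); not a physical model."""
    a, b = theta
    return 1.0 + a * k**2 / (1.0 + k**2) + a * b * k / (1.0 + k)

def poly_features(thetas):
    """Quadratic features [1, a, b, a^2, ab, b^2] for each parameter pair."""
    a, b = thetas[:, 0], thetas[:, 1]
    return np.column_stack([np.ones_like(a), a, b, a**2, a * b, b**2])

class PCAEmulator:
    """PCA compression of spectra plus least-squares regression of the weights."""

    def __init__(self, n_components=2):
        self.n_components = n_components

    def fit(self, thetas, spectra):
        self.mean = spectra.mean(axis=0)
        # Rows of vt are the principal directions of the mean-subtracted spectra.
        _, _, vt = np.linalg.svd(spectra - self.mean, full_matrices=False)
        self.basis = vt[: self.n_components]
        weights = (spectra - self.mean) @ self.basis.T
        # Regress each PCA weight on the polynomial features of the parameters.
        self.coef, *_ = np.linalg.lstsq(poly_features(thetas), weights, rcond=None)
        return self

    def predict(self, thetas):
        weights = poly_features(np.atleast_2d(thetas)) @ self.coef
        return self.mean + weights @ self.basis

rng = np.random.default_rng(0)
k = np.logspace(-2, 1, 50)                            # wavenumbers (arbitrary units)
train_thetas = rng.uniform(0.0, 1.0, size=(30, 2))    # training design
train_spectra = np.array([toy_boost(k, t) for t in train_thetas])

emulator = PCAEmulator(n_components=2).fit(train_thetas, train_spectra)

test_theta = np.array([0.3, 0.7])                     # held-out parameter point
prediction = emulator.predict(test_theta)[0]
max_rel_err = np.max(np.abs(prediction / toy_boost(k, test_theta) - 1.0))
print(f"max relative error on held-out point: {max_rel_err:.2e}")
```

The toy function was chosen so that two components and quadratic features capture it essentially exactly; real simulation outputs would need more components, a richer interpolator, and a careful held-out validation of the emulation error.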