Emulation & Inference

Studying complex physical phenomena often relies on high-fidelity simulations that are computationally expensive, which makes uncertainty quantification, parameter inference, and real-time analysis difficult. The cost of each run limits how thoroughly large parameter spaces can be explored, slowing model validation and scientific discovery in fields ranging from astrophysics to engineering. Efficient, accurate surrogate models, or emulators, address this bottleneck.

Emulators are data-driven approximations that learn the input-output relationship of a simulation and return predictions at a fraction of its computational cost. They are typically built with machine learning methods such as neural networks and Gaussian processes, which provide fast statistical representations of the underlying physics. Quantifying the uncertainty of emulator predictions is equally important, since reliable scientific inference and decision-making depend on it.
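As a rough illustration of the idea, the sketch below fits a Gaussian process emulator to a handful of runs of a toy function and returns a predictive mean together with a standard deviation. Everything named here is an assumption made for the example: `expensive_simulation` is a hypothetical stand-in for a real simulator, and the kernel, its length scale, and the training design are arbitrary choices rather than settings taken from any particular study.

```python
import numpy as np

def rbf_kernel(a, b, length_scale=0.2, variance=1.0):
    """Squared-exponential kernel between two sets of 1-D inputs."""
    d = a[:, None] - b[None, :]
    return variance * np.exp(-0.5 * (d / length_scale) ** 2)

def expensive_simulation(theta):
    """Hypothetical stand-in for a costly simulator (assumption)."""
    return np.sin(2.0 * np.pi * theta)

# Training design: a small number of simulator runs on [0, 1].
theta_train = np.linspace(0.0, 1.0, 8)
y_train = expensive_simulation(theta_train)

# Factorize the kernel matrix once; the jitter keeps it well conditioned.
jitter = 1e-6
K = rbf_kernel(theta_train, theta_train) + jitter * np.eye(theta_train.size)
L = np.linalg.cholesky(K)
alpha = np.linalg.solve(L.T, np.linalg.solve(L, y_train))

def emulate(theta_new):
    """Return the GP posterior mean and standard deviation at theta_new."""
    K_s = rbf_kernel(theta_new, theta_train)
    mean = K_s @ alpha
    v = np.linalg.solve(L, K_s.T)
    var = rbf_kernel(theta_new, theta_new).diagonal() - np.sum(v ** 2, axis=0)
    return mean, np.sqrt(np.clip(var, 0.0, None))

# One point inside the training range, one far outside it.
theta_test = np.array([0.33, 1.5])
mean, std = emulate(theta_test)
print(mean, std)
```

The predictive standard deviation is small near the training runs and grows away from them, which is the behavior that makes such an emulator usable for uncertainty-aware inference.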

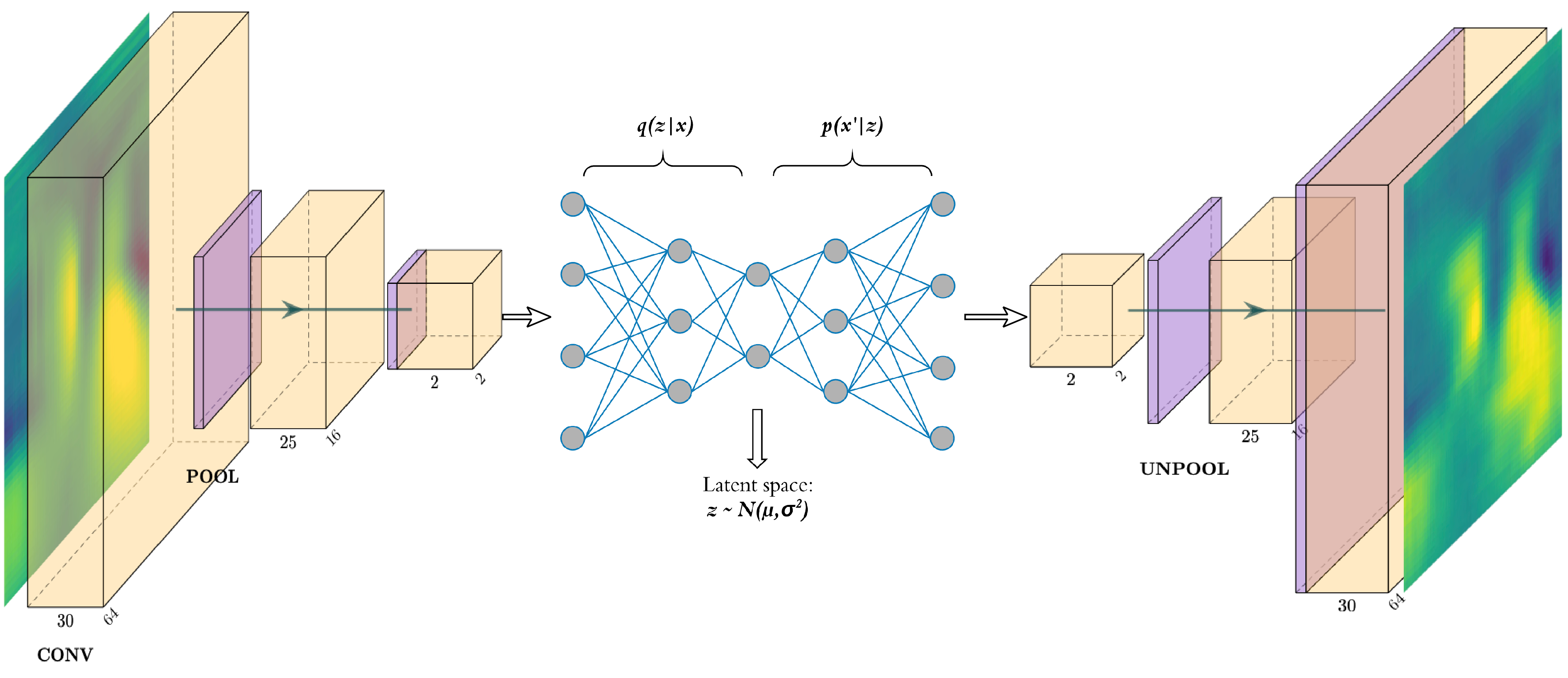

For high-dimensional systems such as fluid flows, emulation is usually combined with reduced-order modeling (ROM). A ROM projects the system’s dynamics onto a lower-dimensional subspace, simplifying the problem while retaining its dominant features. Pairing ROMs with probabilistic machine learning yields surrogates that accelerate predictions and also provide a principled way to capture and propagate uncertainty through the modeling process, which makes these tools both more useful and more trustworthy.
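The projection step can be sketched with proper orthogonal decomposition (POD) computed from a singular value decomposition. POD is one common way to build the subspace and is used here as an assumption, not as the specific method behind the results described on this page. The snapshot matrix is synthetic and the names `snapshots`, `basis`, and `latent` are illustrative.

```python
import numpy as np

# Hypothetical snapshot matrix: each column is one field snapshot in time.
n_dof, n_snap = 200, 40
x = np.linspace(0.0, 1.0, n_dof)
t = np.linspace(0.0, 1.0, n_snap)
snapshots = (
    np.outer(np.sin(np.pi * x), np.cos(2.0 * np.pi * t))
    + 0.5 * np.outer(np.sin(2.0 * np.pi * x), np.sin(2.0 * np.pi * t))
)

# Center the data and compute the POD modes via a thin SVD.
mean_field = snapshots.mean(axis=1, keepdims=True)
U, s, _ = np.linalg.svd(snapshots - mean_field, full_matrices=False)

r = 2                                         # number of retained modes
basis = U[:, :r]                              # POD basis, shape (n_dof, r)
latent = basis.T @ (snapshots - mean_field)   # reduced coordinates, (r, n_snap)

# Lift back to the full space and measure what the truncation lost.
reconstruction = mean_field + basis @ latent
rel_error = np.linalg.norm(snapshots - reconstruction) / np.linalg.norm(snapshots)
energy = np.sum(s[:r] ** 2) / np.sum(s ** 2)
print(latent.shape, rel_error, energy)
```

An emulator can then be trained on the low-dimensional `latent` coordinates instead of the full fields, with `basis` used to map its predictions back to the original space.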

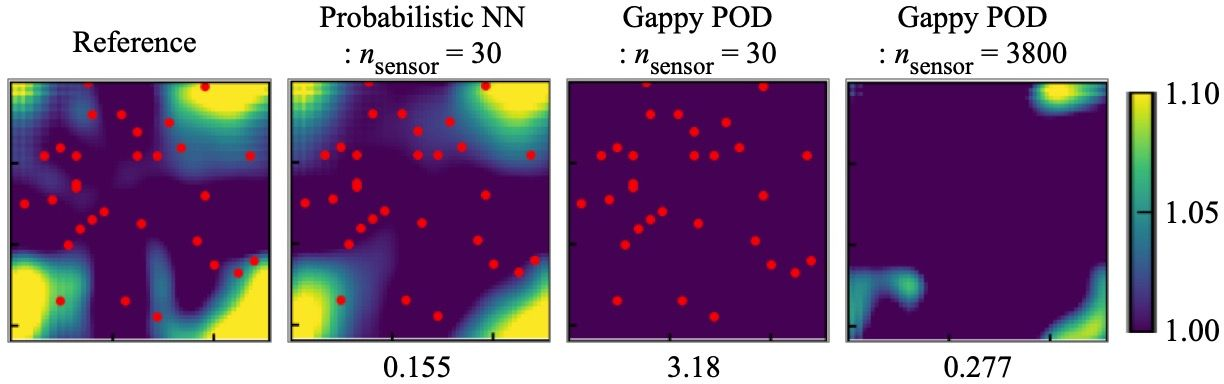

My work in this area develops and applies emulation and inference techniques that bridge the gap between high-fidelity simulations and the demands of scientific exploration. I have contributed to probabilistic neural network (PNN)-based reduced-order surrogates for complex fluid flows, which enable efficient forward predictions and robust data recovery from sparse measurements. I have also developed methods for latent-space time evolution of non-intrusive reduced-order models using Gaussian process emulation. Learning the evolution in a compact, lower-dimensional representation accelerates the dynamic prediction of high-dimensional systems.

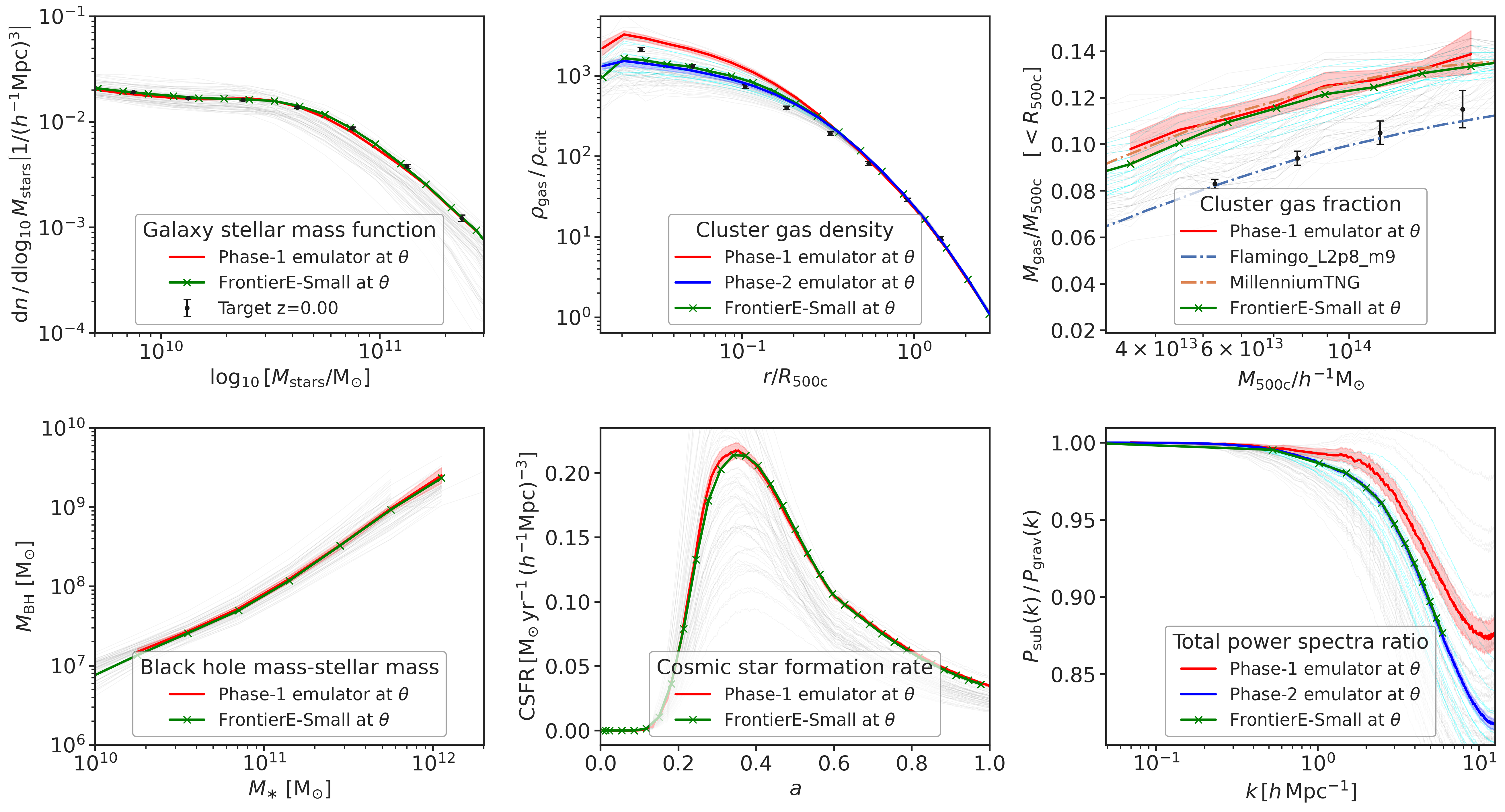

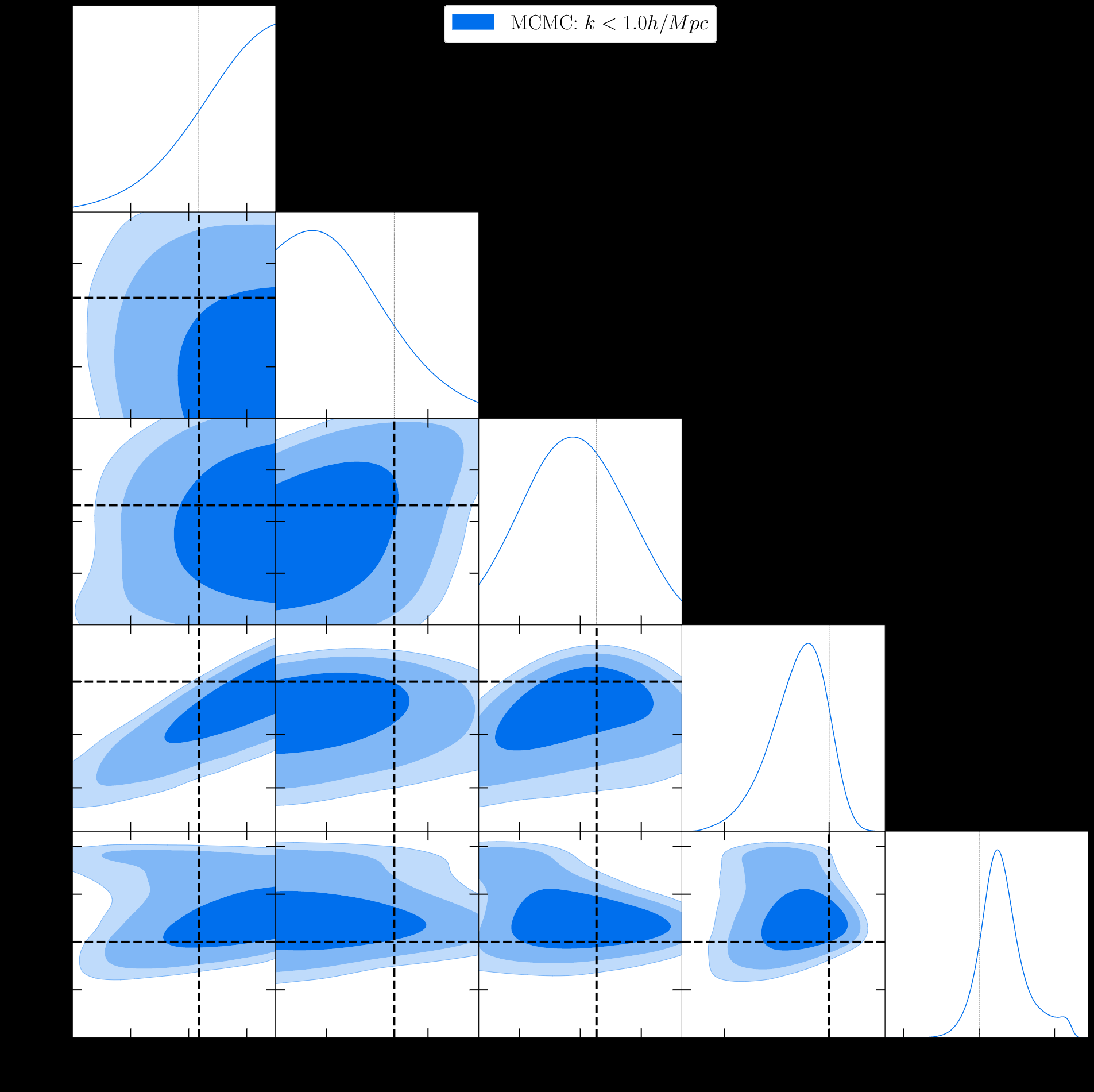

Beyond fluid dynamics, I have applied these emulation frameworks to problems in cosmology. One example is an emulator for the matter power spectrum in f(R) modified gravity cosmologies, which speeds up the exploration of alternative theories of gravity. I have also used emulator-based inference to constrain and better understand cosmological subgrid models. This enables robust statistical analysis and improves our ability to extract cosmological parameters from observational data, with quantified uncertainties and at much lower computational cost.
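To make the notion of emulator-based inference concrete, here is a minimal sketch in which a cheap surrogate replaces the simulator inside a likelihood evaluated on a parameter grid. It is a toy under stated assumptions and not the cosmological pipeline described above: `simulator` is a hypothetical one-parameter model, the emulator is a simple polynomial fit, the observation is synthetic and noise-free, and the prior is flat on the design range.

```python
import numpy as np

K_MODES = np.array([0.5, 1.0, 2.0])  # hypothetical summary-statistic bins

def simulator(theta):
    """Hypothetical expensive model mapping one parameter to 3 outputs."""
    return np.exp(-theta * K_MODES)

# Build the emulator from a few simulator runs: one polynomial per output.
design = np.linspace(0.0, 2.0, 9)
runs = np.array([simulator(th) for th in design])          # shape (9, 3)
coeffs = [np.polyfit(design, runs[:, j], deg=5) for j in range(3)]

def emulator(theta):
    """Cheap surrogate; accepts an array of theta, returns shape (3, n)."""
    theta = np.atleast_1d(theta)
    return np.array([np.polyval(c, theta) for c in coeffs])

# Synthetic observation generated at a known parameter value.
theta_true = 0.8
sigma = 0.01                       # assumed Gaussian measurement error
data = simulator(theta_true)

# Gaussian log-likelihood on a dense grid, using only the emulator.
grid = np.linspace(0.0, 2.0, 2001)
resid = (data[:, None] - emulator(grid)) / sigma
log_like = -0.5 * np.sum(resid ** 2, axis=0)

# Normalize to a posterior density under the flat prior.
post = np.exp(log_like - log_like.max())
post /= post.sum() * (grid[1] - grid[0])
theta_map = grid[np.argmax(post)]
print(theta_map)
```

The grid contains 2001 likelihood evaluations but only 9 simulator runs were needed to build the surrogate, which is the source of the speed-up. In a realistic setting the emulator's own predictive uncertainty would normally be added to the error budget in the likelihood.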